Mastering Reinforcement Learning Basics

The Complete Guide to Learning and Implementing Reinforcement Learning Basics

What is Reinforcement Learning?

Reinforcement Learning (RL) is a type of Machine Learning where an agent learns to make decisions by interacting with an environment. The agent receives rewards or penalties based on its actions, and its goal is to maximize total reward over time.

Real-Life Analogy:

Think of teaching a dog to fetch a ball. Every time the dog brings the ball back, you give it a treat. Over time, the dog learns that fetching the ball leads to a reward, so it keeps doing it.

What is an Environment in Reinforcement Learning?

An environment is the world in which an agent operates and learns by interacting.

It defines:

What the agent sees (observations or state),

What the agent can do (actions),

What the agent gets in return (rewards),

And how things change as a result of those actions.

Environment = Game Rules + State Machine

You can think of it like a video game engine:

The environment handles the game rules.

The agent plays the game by making decisions.

Real-life Example: FrozenLake-v1 (Environment)

Let’s say the agent is a character trying to reach a goal without falling into a hole on an icy lake.

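State (observation): Current position on the lake grid (e.g., (0,0)). FrozenLake-v1 actually encodes it as a single integer, row × 4 + column on the 4x4 map, so (0,0) is state 0.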

Action: Move up, down, left, or right

Reward: +1 for reaching the goal, 0 otherwise

Environment: Controls what happens when the agent takes a step.

So:

The environment knows where the holes are, what the goal is, and how the world responds to actions.

The agent doesn’t — it has to learn by trial and error.

Why Do We Need the Environment?

Because:

✅ 1. Interaction Is the Core of RL

There is no learning without an environment. The agent needs to see what happens when it acts.

The environment gives feedback (reward and next state) after every action.

✅ 2. It Defines the Problem to Solve

Different environments define different challenges:

Balancing a pole (CartPole)

Navigating a maze (FrozenLake)

Playing a game (Atari, Chess)

Driving a car (Autonomous driving sims)

✅ 3. Helps Train & Evaluate Agents

Standard environments (like those in Gymnasium or OpenAI Gym) provide:

Reproducible tasks

Benchmarks for performance

Easy interfaces to plug your agent in

Environment-Agent Interaction Loop

Repeat for each step:

Agent observes current state

Agent chooses action

Environment applies action

Environment returns:

new state

reward

done or not
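The loop above can be sketched in plain Python. `CorridorEnv` below is a hypothetical toy environment (a five-cell corridor with the goal at the right end), not a Gymnasium class, although its `reset()`/`step()` return values loosely imitate Gymnasium's convention, where "done" is split into *terminated* (the task ended) and *truncated* (a time limit was hit).

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..4, start at 0, goal at 4."""

    def __init__(self, length=5, max_steps=50):
        self.length = length
        self.max_steps = max_steps
        self.pos = 0
        self.steps = 0

    def reset(self):
        self.pos, self.steps = 0, 0
        return self.pos, {}                        # (observation, info)

    def step(self, action):
        # action 0 = left, 1 = right; walls keep the agent inside the corridor
        move = 1 if action == 1 else -1
        self.pos = min(max(self.pos + move, 0), self.length - 1)
        self.steps += 1
        terminated = self.pos == self.length - 1   # reached the goal
        truncated = self.steps >= self.max_steps   # ran out of time
        reward = 1.0 if terminated else 0.0
        return self.pos, reward, terminated, truncated, {}


def run_episode(env, policy):
    state, _ = env.reset()                         # agent observes the start state
    total_reward, done = 0.0, False
    while not done:
        action = policy(state)                     # agent chooses an action
        state, reward, terminated, truncated, _ = env.step(action)  # env applies it
        total_reward += reward
        done = terminated or truncated             # "done or not"
    return total_reward, env.steps


rng = random.Random(0)


def random_policy(state):
    return rng.choice([0, 1])


def always_right(state):
    return 1


print(run_episode(CorridorEnv(), always_right))    # (1.0, 4)
print(run_episode(CorridorEnv(), random_policy))
```

Swapping `random_policy` for a learned policy is the only thing that changes once training starts; the loop itself stays the same.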

Simple Analogy:

Agent = You, a student.

Environment = A quiz app.

Every time you pick an answer (action), the app tells you:

✅ Correct or ❌ wrong (reward),

Shows the next question (next state),

Ends the quiz when complete (done).

What is a State Machine?

A state machine (also called a finite state machine or FSM) is a model of a system that is always in exactly one of a finite set of predefined states and switches between them based on inputs or rules.

Key Concepts:

State: A specific situation or configuration.

Event/Input/Action: Something that causes a change.

Transition: The rule that defines how to move from one state to another.

Start State: Where the machine begins.

End State (optional): Where the machine stops.

FrozenLake Example (Reinforcement Learning)

Let’s connect this to RL.

Environment = State Machine

States: Positions on the ice grid like S0, S1, ..., G (Goal), H (Hole)

Actions: Left, Right, Up, Down

Transitions:

If the agent is in S0 and goes right → it moves to S1

If the agent is in S3 and goes down → it falls into a hole (H) and the episode ends

The environment manages this—it’s like a state machine that changes the state based on what the agent does.
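To make the state-machine view concrete, here is a minimal sketch of a deterministic (non-slippery) FrozenLake transition function. The map and the action encoding (0=LEFT, 1=DOWN, 2=RIGHT, 3=UP) follow the FrozenLake-v1 layout described later in this post; the `transition` helper itself is our own illustration, not Gymnasium's API. States are numbered row by row, so S3 is the top-right cell.

```python
# 4x4 FrozenLake map: S=start, F=frozen (safe), H=hole, G=goal
MAP = ["SFFF",
       "FHFH",
       "FFFH",
       "HFFG"]
N_ROWS, N_COLS = len(MAP), len(MAP[0])

# Action -> (row change, column change); 0=LEFT, 1=DOWN, 2=RIGHT, 3=UP
MOVES = {0: (0, -1), 1: (1, 0), 2: (0, 1), 3: (-1, 0)}


def transition(state, action):
    """Return (next_state, reward, done) for a non-slippery lake."""
    row, col = divmod(state, N_COLS)          # state index -> grid cell
    d_row, d_col = MOVES[action]
    # Bumping into the edge of the grid leaves the agent where it is
    row = min(max(row + d_row, 0), N_ROWS - 1)
    col = min(max(col + d_col, 0), N_COLS - 1)
    tile = MAP[row][col]
    reward = 1.0 if tile == "G" else 0.0
    done = tile in ("H", "G")                 # holes and the goal end the episode
    return row * N_COLS + col, reward, done


print(transition(0, 2))    # S0 + RIGHT -> (1, 0.0, False)
print(transition(3, 1))    # S3 + DOWN  -> (7, 0.0, True), a hole
print(transition(14, 2))   # next to G  -> (15, 1.0, True)
```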

Why Is This Important in RL?

The environment (like CartPole, FrozenLake, etc.) acts like a state machine:

The agent provides actions.

The environment transitions to the next state, gives a reward, and tells if it's done.

This forms the interaction loop for learning.

Analogy:

Think of a vending machine:

States: Waiting for coins, item selected, dispensing

Input: Coin, button press

Transitions: Insert coin → select item → dispense item → reset to waiting
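The vending machine fits in a small transition table. In this sketch the state and input names are hypothetical: `has_coin` stands for "coin inserted, waiting for a selection", the button press is the item selection, and inputs with no matching transition are simply ignored.

```python
# (current state, input) -> next state
TRANSITIONS = {
    ("waiting", "coin"): "has_coin",          # coin inserted
    ("has_coin", "button"): "dispensing",     # item selected
    ("dispensing", "item_taken"): "waiting",  # reset to waiting
}


def run_machine(inputs, state="waiting"):
    """Feed inputs to the machine and record every state it passes through."""
    history = [state]
    for symbol in inputs:
        # No matching transition: the machine stays in its current state
        state = TRANSITIONS.get((state, symbol), state)
        history.append(state)
    return history


print(run_machine(["button", "coin", "button", "item_taken"]))
# ['waiting', 'waiting', 'has_coin', 'dispensing', 'waiting']
```

An RL environment works the same way, except that each transition also produces a reward, and the agent supplies the inputs.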

Types of Environments

In Reinforcement Learning (RL), environments come in different types depending on the goal, complexity, and type of interaction. Below is a simple breakdown of the main types of RL environments with examples and their purpose:

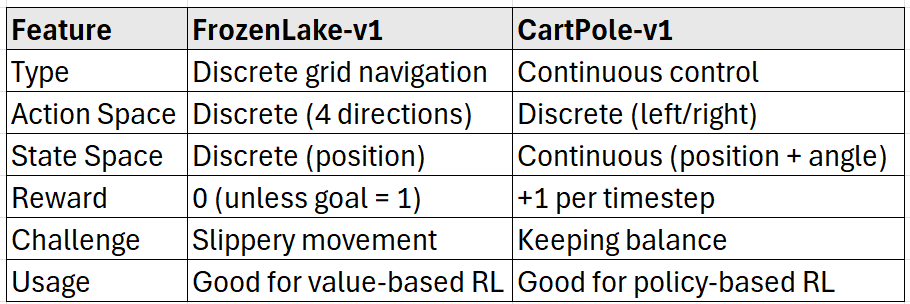

1. Classic Control Environments

These are simple, physics-based problems used for learning and benchmarking algorithms.

2. Toy Text / Grid-World Environments

These are discrete environments often used for value-based methods like Q-learning.

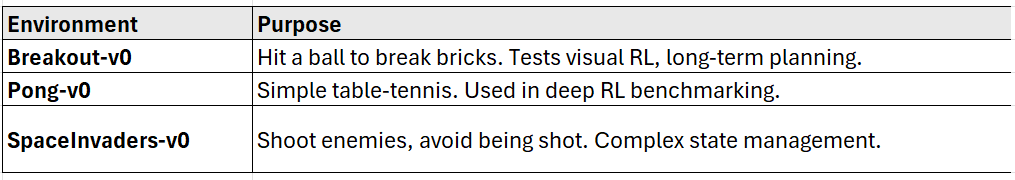

3. Atari Environments

Used to test agents on visual input and complex game strategies.

4. Physics Simulated Environments (Mujoco, PyBullet)

Used for continuous control, robotics, and advanced physics simulations.

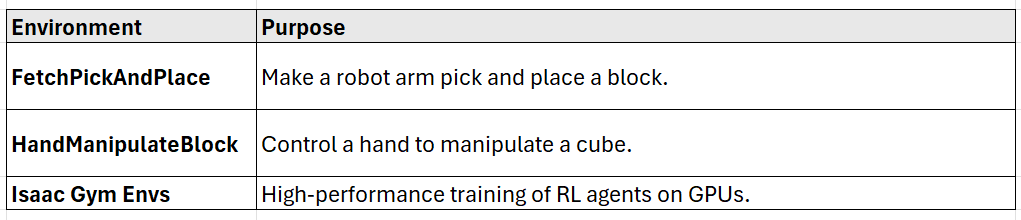

5. Robotics Environments (OpenAI Gym Robotics, Isaac Gym)

Real-world robotic tasks simulated in software.

6. Games & Strategy Environments

Used to train agents in decision-making, planning, and strategy.

7. Custom/Real-World Simulated Environments

Used for research and industrial applications.

Summary

Core Reinforcement Learning Algorithms and Strategies

1. Q-Learning & Bellman Equation

Concept:

Q-Learning is a value-based RL algorithm.

It learns the best action to take in a given state using a Q-table (state-action value table).

The Bellman Equation is a formula used to update Q-values based on expected future rewards.

🔁 Bellman Equation (in simple words):

"The value of taking an action in a state = immediate reward + discounted value of the best action in the next state."

Real-Time Use Cases:

Autonomous Warehouse Robots

Robots learn optimal paths to pick and place items without collisions.

Elevator Scheduling

Deciding which elevator should respond to which floor request to minimize wait time.

Traffic Signal Control

Controlling traffic lights to reduce overall waiting time at intersections.

Video Game AI

Game agents learning to survive, collect rewards, and defeat enemies by trial-and-error.

Energy Grid Optimization

Adjusting energy distribution in smart grids based on consumption patterns.
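To tie the Q-learning concept above to code: written out, the update is Q(s, a) ← Q(s, a) + α · [r + γ · max over a′ of Q(s′, a′) − Q(s, a)], where α is the learning rate and γ the discount factor. The sketch below applies it to a non-slippery version of the 4x4 FrozenLake map used later in this post. The hyperparameters (α = 0.5, γ = 0.9, ε = 0.2, 3000 episodes) are illustrative assumptions rather than tuned values, and ε-greedy exploration is one common choice among several.

```python
import random

# Non-slippery 4x4 FrozenLake map (S=start, F=frozen, H=hole, G=goal)
MAP = ["SFFF", "FHFH", "FFFH", "HFFG"]
SIZE = 4
MOVES = [(0, -1), (1, 0), (0, 1), (-1, 0)]   # 0=LEFT, 1=DOWN, 2=RIGHT, 3=UP


def step(state, action):
    """Deterministic transition: returns (next_state, reward, done)."""
    row, col = divmod(state, SIZE)
    row = min(max(row + MOVES[action][0], 0), SIZE - 1)
    col = min(max(col + MOVES[action][1], 0), SIZE - 1)
    tile = MAP[row][col]
    return row * SIZE + col, (1.0 if tile == "G" else 0.0), tile in ("H", "G")


def q_learning(episodes=3000, alpha=0.5, gamma=0.9, epsilon=0.2,
               max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * 4 for _ in range(SIZE * SIZE)]   # Q-table: 16 states x 4 actions
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:            # explore
                action = rng.randrange(4)
            else:                                 # exploit (random tie-break)
                best = max(q[state])
                action = rng.choice([a for a in range(4) if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Bellman target: reward + discounted value of the best next action
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_path(q, max_steps=20):
    """Follow the learned greedy policy from the start cell."""
    state, path = 0, [0]
    for _ in range(max_steps):
        action = max(range(4), key=lambda a: q[state][a])
        state, _, done = step(state, action)
        path.append(state)
        if done:
            break
    return path


q = q_learning()
print(greedy_path(q))   # a route from cell 0 (start) to cell 15 (goal)
```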

2. Multi-Armed Bandits for Decision Making

Concept:

Think of a slot machine (bandit) with multiple arms.

Each arm gives a different unknown reward.

The goal is to find the best arm (decision) to pull for maximum reward, balancing between:

Exploration: Try different arms to gather information.

Exploitation: Stick with the best-known arm.

Key Idea: Explore vs Exploit Dilemma
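A minimal sketch of one common strategy, ε-greedy, assuming three hypothetical arms that pay out 1 with probabilities 0.2, 0.5 and 0.8 (unknown to the agent). With probability ε the agent explores a random arm; otherwise it exploits the arm with the best running average. Other strategies, such as UCB or Thompson sampling, balance the trade-off differently.

```python
import random


def epsilon_greedy_bandit(true_probs, steps=10_000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    n_arms = len(true_probs)
    counts = [0] * n_arms          # how often each arm was pulled
    estimates = [0.0] * n_arms     # running mean reward per arm
    for _ in range(steps):
        if rng.random() < epsilon:                          # explore
            arm = rng.randrange(n_arms)
        else:                                               # exploit
            arm = max(range(n_arms), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < true_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental mean: Q_new = Q_old + (reward - Q_old) / n
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return counts, estimates


counts, estimates = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print(counts)                              # most pulls go to the best arm
print([round(e, 2) for e in estimates])    # close to the hidden probabilities
```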

Real-Time Use Cases:

Online Advertisement Selection (AdTech)

Choosing which ad to show to users based on previous clicks.

News Article Recommendation

Displaying the most engaging article out of multiple options for a user.

Clinical Trials

Dynamically assigning treatments to patients while maximizing overall trial success.

E-commerce Product Placement

Deciding which product tiles to show on a home page to maximize conversion.

Email Marketing Campaigns

Choosing the best subject line or email content to improve open and click rates.

3. Policy Gradients (REINFORCE Algorithm)

Concept:

Instead of learning values like Q-learning, policy gradients learn the policy directly (i.e., the probability of taking an action in a state).

The REINFORCE algorithm is a foundational policy gradient method.

It updates the policy parameters at the end of each episode, using the returns (discounted sums of rewards) collected during that episode.
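Here is a minimal tabular REINFORCE sketch on a hypothetical five-cell corridor (start in cell 0, reward +1 for reaching cell 4). The policy is a softmax over two action preferences per cell. The agent plays a whole episode first, then computes the discounted return G_t for every step and nudges the log-probability of each action it took in proportion to γ^t · G_t (the textbook form). The learning rate, discount and episode count are illustrative assumptions.

```python
import math
import random

N_STATES, GOAL = 5, 4          # corridor cells 0..4; the goal is cell 4
LEFT, RIGHT = 0, 1


def step(state, action):
    """Move one cell; walls clip the move. Reward 1 on reaching the goal."""
    next_state = min(max(state + (1 if action == RIGHT else -1), 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def policy(theta, state):
    """Softmax over this state's action preferences -> [P(LEFT), P(RIGHT)]."""
    top = max(theta[state])
    exps = [math.exp(p - top) for p in theta[state]]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(episodes=3000, alpha=0.1, gamma=0.9, max_steps=30, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0] for _ in range(N_STATES)]      # policy parameters
    for _ in range(episodes):
        # 1) Play one full episode with the current policy
        state, trajectory = 0, []
        for _ in range(max_steps):
            probs = policy(theta, state)
            action = RIGHT if rng.random() < probs[RIGHT] else LEFT
            next_state, reward, done = step(state, action)
            trajectory.append((state, action, reward, probs))
            state = next_state
            if done:
                break
        # 2) Compute the return G_t for every step, working backwards
        returns, g = [], 0.0
        for _, _, reward, _ in reversed(trajectory):
            g = reward + gamma * g
            returns.append(g)
        returns.reverse()
        # 3) Policy-gradient update, only after the episode has finished
        for t, ((state, action, _, probs), g) in enumerate(zip(trajectory, returns)):
            for a in (LEFT, RIGHT):
                # Gradient of log pi(action | state) w.r.t. theta[state][a]
                grad = (1.0 if a == action else 0.0) - probs[a]
                theta[state][a] += alpha * (gamma ** t) * g * grad
    return theta


theta = reinforce()
print([round(policy(theta, s)[RIGHT], 2) for s in range(GOAL)])  # P(RIGHT) per cell
```

Note that the update never builds a Q-table: only the action probabilities change.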

Real-Time Use Cases:

Robotic Manipulation

Learning to grasp, lift, or pour liquids precisely using trial-based learning.

Drone Navigation

Teaching drones to fly through obstacles or deliver packages using optimal paths.

Autonomous Trading Bots

Bots learning investment strategies to maximize returns in dynamic stock markets.

Personalized Exercise Coaching

Tailoring workouts in real-time based on user feedback and progress.

AI for Surgical Assistance

Learning best motion sequences for robotic arms assisting human surgeons.

4. Actor-Critic Methods

Concept:

Actor-Critic methods use two models working together:

🎭 Actor: Decides what action to take (i.e., the policy).

🧮 Critic: Evaluates how good that action was (i.e., value function).

This is like a player and a coach:

The player (Actor) takes actions in a game.

The coach (Critic) gives feedback on how well the player is doing.

How It Works:

The Actor picks an action based on current policy.

The environment returns a reward and new state.

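The Critic calculates the advantage: how much better or worse the outcome turned out than the Critic expected (in simple versions, the TD error: reward + discounted value of the new state − value of the current state).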

Both the Actor and Critic are updated:

Actor improves policy based on advantage.

Critic updates its value estimates.
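The steps above can be sketched as a one-step actor-critic on a hypothetical five-cell corridor (start in cell 0, +1 for reaching cell 4). The critic's TD error, δ = r + γ · V(s′) − V(s), serves as the advantage estimate: positive when the step went better than the critic expected. Unlike REINFORCE, both models are updated after every step rather than at the end of the episode. Step sizes and episode count are illustrative assumptions, and the γ^t factor from the textbook version is omitted, as many practical implementations do.

```python
import math
import random

N_STATES, GOAL = 5, 4          # corridor cells 0..4; the goal is cell 4
LEFT, RIGHT = 0, 1


def step(state, action):
    next_state = min(max(state + (1 if action == RIGHT else -1), 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def policy(theta, state):
    """Actor: softmax over the state's action preferences."""
    top = max(theta[state])
    exps = [math.exp(p - top) for p in theta[state]]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic(episodes=2000, alpha_actor=0.1, alpha_critic=0.2,
                 gamma=0.9, max_steps=30, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0] for _ in range(N_STATES)]   # actor parameters
    values = [0.0] * N_STATES                       # critic: V(s) estimates
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            probs = policy(theta, state)                       # actor picks
            action = RIGHT if rng.random() < probs[RIGHT] else LEFT
            next_state, reward, done = step(state, action)     # env responds
            # Critic: TD error = (reward + discounted next value) - current estimate
            target = reward if done else reward + gamma * values[next_state]
            td_error = target - values[state]
            values[state] += alpha_critic * td_error           # critic update
            for a in (LEFT, RIGHT):                            # actor update
                grad = (1.0 if a == action else 0.0) - probs[a]
                theta[state][a] += alpha_actor * td_error * grad
            state = next_state
            if done:
                break
    return theta, values


theta, values = actor_critic()
print([round(policy(theta, s)[RIGHT], 2) for s in range(GOAL)])  # P(RIGHT) per cell
print([round(v, 2) for v in values[:GOAL]])                      # critic's V(s)
```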

Why Actor-Critic?

✅ Combines benefits of:

Policy Gradient methods (like REINFORCE), which handle continuous action spaces naturally.

Value-Based methods (like Q-Learning), which tend to be more sample efficient.

✅ Typically lower-variance, more stable learning than pure REINFORCE, because the Critic's estimates replace noisy full-episode returns.

Real-Time Use Cases:

Self-Driving Cars

Making real-time decisions on steering, acceleration, and braking based on road conditions.

Industrial Process Automation

Adjusting machinery parameters in factories to optimize output and reduce waste.

Virtual Personal Assistants

Improving dialog generation by learning from user responses and preferences.

Smart HVAC Systems

Balancing energy use and user comfort in real-time across seasons and times of day.

Sports Strategy Optimization (e.g., AI Coaches)

Suggesting dynamic plays or substitutions in sports based on match situations.

FrozenLake-v1

Goal:

The agent (you) needs to move from the Start (S) to the Goal (G) by navigating a frozen lake without falling into holes (H).

Environment Layout (4x4 grid):

S F F F

F H F H

F F F H

H F F G

S: Start

F: Frozen surface (safe)

H: Hole (danger, game over)

G: Goal

Actions:

0: LEFT

1: DOWN

2: RIGHT

3: UP

Challenges:

If is_slippery=True (the default), your actions may not always work as intended: the agent can slide in a different direction, adding randomness like real ice.

You must learn a policy that maximizes the chance of reaching the goal.
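A minimal sketch of what "slippery" means, assuming the slip model we understand Gymnasium's FrozenLake to use by default: the agent moves in the intended direction or in one of the two perpendicular directions, each with probability 1/3 (check the documentation of your installed version). `slippery_step` is a hypothetical helper, not part of Gymnasium.

```python
import random
from collections import Counter

MAP = ["SFFF", "FHFH", "FFFH", "HFFG"]
SIZE = 4
MOVES = [(0, -1), (1, 0), (0, 1), (-1, 0)]   # 0=LEFT, 1=DOWN, 2=RIGHT, 3=UP


def slippery_step(state, action, rng):
    """Assumed slip model: intended or perpendicular direction, 1/3 each."""
    actual = rng.choice([(action - 1) % 4, action, (action + 1) % 4])
    row, col = divmod(state, SIZE)
    row = min(max(row + MOVES[actual][0], 0), SIZE - 1)
    col = min(max(col + MOVES[actual][1], 0), SIZE - 1)
    tile = MAP[row][col]
    return row * SIZE + col, (1.0 if tile == "G" else 0.0), tile in ("H", "G")


rng = random.Random(0)
# Press RIGHT from the start cell many times and count where the agent lands
landings = Counter(slippery_step(0, 2, rng)[0] for _ in range(3000))
print(sorted(landings.items()))
# cell 1 = moved right, cell 4 = slid down, cell 0 = slid up into the wall
```

This is why a plan that works perfectly on the non-slippery map can fail here: the same action sequence leads to different outcomes, so the agent has to learn actions that are safe on average.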

CartPole-v1

Goal:

Keep a pole balanced upright on a moving cart for as long as possible by applying forces left or right.

How it works:

The environment gives you a state with four values: cart position, cart velocity, pole angle, and pole angular velocity.

You need to apply force to either move the cart left (0) or right (1).

If the pole falls too far or the cart goes out of bounds, the episode ends.

Reward:

You get +1 reward for every timestep the pole is still up. The maximum episode length is 500 steps.
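To see where the +1-per-step reward and the termination rules come from, here is a simplified pure-Python re-implementation of the classic cart-pole dynamics with Euler integration. The constants (gravity 9.8, cart mass 1.0, pole mass 0.1, half pole length 0.5, push force 10, 0.02 s per step, limits of 12 degrees and 2.4 units) are our understanding of CartPole-v1's defaults, so treat them as assumptions. `lean_policy` is a hand-written heuristic, not a learned agent; in practice you would use the real environment via `gymnasium.make("CartPole-v1")`.

```python
import math
import random

# Physical constants (assumed to mirror CartPole-v1's defaults)
GRAVITY, CART_MASS, POLE_MASS = 9.8, 1.0, 0.1
TOTAL_MASS = CART_MASS + POLE_MASS
HALF_POLE = 0.5                               # half of the pole's length
POLE_MASS_LENGTH = POLE_MASS * HALF_POLE
FORCE, TAU = 10.0, 0.02                       # push strength, seconds per step
ANGLE_LIMIT = 12 * 2 * math.pi / 360          # 12 degrees in radians
X_LIMIT, MAX_STEPS = 2.4, 500


def physics_step(state, action):
    """Advance (x, x_dot, theta, theta_dot) by one time step."""
    x, x_dot, theta, theta_dot = state
    force = FORCE if action == 1 else -FORCE   # 1 = push right, 0 = push left
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    temp = (force + POLE_MASS_LENGTH * theta_dot ** 2 * sin_t) / TOTAL_MASS
    theta_acc = (GRAVITY * sin_t - cos_t * temp) / (
        HALF_POLE * (4.0 / 3.0 - POLE_MASS * cos_t ** 2 / TOTAL_MASS))
    x_acc = temp - POLE_MASS_LENGTH * theta_acc * cos_t / TOTAL_MASS
    # Explicit Euler update (right-hand sides use the old values)
    return (x + TAU * x_dot, x_dot + TAU * x_acc,
            theta + TAU * theta_dot, theta_dot + TAU * theta_acc)


def run_episode(policy, seed=0):
    rng = random.Random(seed)
    state = tuple(rng.uniform(-0.05, 0.05) for _ in range(4))  # small random start
    total_reward = 0.0
    for _ in range(MAX_STEPS):                 # episode is cut off at 500 steps
        state = physics_step(state, policy(state))
        total_reward += 1.0                    # +1 for every step taken
        x, _, theta, _ = state
        if abs(x) > X_LIMIT or abs(theta) > ANGLE_LIMIT:
            break                              # pole fell or cart left the track
    return total_reward


def lean_policy(state):
    """Heuristic: push toward the side the pole is leaning or falling."""
    _, _, theta, theta_dot = state
    return 1 if theta + theta_dot > 0 else 0


print(run_episode(lambda state: 0))   # always pushing left fails within a few steps
print(run_episode(lean_policy))       # compare with a simple feedback rule
```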

For more in-depth technical insights and articles, feel free to explore:

Girish Central

LinkTree: GirishHub – A single hub for all my content, resources, and online presence.

LinkedIn: Girish LinkedIn – Connect with me for professional insights, updates, and networking.

Ebasiq

Substack: ebasiq by Girish – In-depth articles on AI, Python, and technology trends.

Technical Blog: Ebasiq Blog – Dive into technical guides and coding tutorials.

GitHub Code Repository: Girish GitHub Repos – Access practical Python, AI/ML, Full Stack and coding examples.

YouTube Channel: Ebasiq YouTube Channel – Watch tutorials and tech videos to enhance your skills.

Instagram: Ebasiq Instagram – Follow for quick tips, updates, and engaging tech content.

GirishBlogBox

Substack: Girish BlogBlox – Thought-provoking articles and personal reflections.

Personal Blog: Girish - BlogBox – A mix of personal stories, experiences, and insights.

Ganitham Guru

Substack: Ganitham Guru – Explore the beauty of Vedic mathematics, Ancient Mathematics, Modern Mathematics and beyond.

Mathematics Blog: Ganitham Guru – Simplified mathematics concepts and tips for learners.