LLM Model Evaluation Techniques: A Deep Dive into Offline and Online Assessment Strategies

Exploring Core Evaluation Metrics for LLMs: From Benchmarks to User Feedback

Large Language Models (LLMs) like GPT, PaLM, Claude, and LLaMA are powering a new generation of AI applications across industries. However, building an LLM is only half the challenge — evaluating it effectively is just as critical.

Model evaluation ensures that the generated outputs are not only accurate and fluent, but also aligned with user intent, safe, and reliable across real-world contexts. As LLMs become more complex and serve high-stakes applications in healthcare, law, education, and finance, the evaluation techniques must evolve beyond surface-level benchmarks.

In this technical deep dive, we explore how LLMs are evaluated in two broad domains — Offline Evaluation and Online Evaluation. We further break down discriminative vs. generative tasks, explain the most widely used metrics like BLEU, ROUGE, METEOR, and Perplexity, and explore how real-time user feedback and engagement drive online evaluation.

This guide is tailored for practitioners, researchers, AI developers, and product leaders who want to understand how to assess LLMs end-to-end — from benchmark scores to production performance.

I. OFFLINE EVALUATION (Static, Pre-Deployment Testing)

Definition:

Offline evaluation refers to the evaluation of LLMs using fixed datasets and standardized benchmarks, without human interaction in real time. It is controlled, reproducible, and interpretable, but may not fully reflect real-world usage.

Offline evaluation can be broadly divided into two subtypes based on the nature of the task:

A. Discriminative Tasks (Classification/Ranking)

What are they?

These tasks require the model to choose the best option from a set of candidates or predict labels from a fixed space.

Common Use Cases:

Multiple Choice QA (e.g., MMLU)

Sentence similarity (e.g., STS Benchmark)

Natural Language Inference (e.g., MNLI, RTE)

Next Token Prediction (e.g., perplexity benchmarks)

Entity recognition and classification

Evaluation Metrics:

Accuracy: % of correct predictions

Precision / Recall / F1 Score: For imbalanced classes or multi-label classification

Top-k Accuracy: When multiple predictions are acceptable (e.g., Top-3)

Why Important:

Discriminative tasks help benchmark the core understanding and reasoning ability of the LLM in controlled conditions, especially where precise answers matter (e.g., legal, medical).

B. Generative Tasks (Free-form Text Generation)

What are they?

Generative tasks require the model to generate open-ended, free-form content without predefined answer choices.

Common Use Cases:

Summarization (e.g., CNN/DailyMail)

Machine Translation (e.g., WMT datasets)

Dialogue systems (e.g., ConvAI)

Code generation (e.g., HumanEval, MBPP)

Open-ended Q&A (e.g., TriviaQA)

Evaluation Metrics:

Challenges:

Multiple valid outputs (e.g., paraphrasing) make scoring subjective.

Metrics like BLEU/ROUGE don’t fully capture coherence, factual accuracy, or logical consistency.

Hence, human evaluation or preference modeling is sometimes necessary.

II. ONLINE EVALUATION (Dynamic, Post-Deployment Testing)

Definition:

Online evaluation involves monitoring and evaluating the LLM in real-world usage, with actual user inputs and behavioral data. It complements offline metrics with user-centric metrics such as usefulness, satisfaction, and task success.

How It Works:

Model is deployed in a product (e.g., chatbot, search assistant, content generator).

Real user interactions are tracked and analyzed using logs and feedback mechanisms.

Techniques like A/B testing, feature flagging, and incremental rollout are used.

Online Evaluation Metrics: (Grouped)

1. User Engagement

Measures how actively users interact with the model.

Session Length

Messages per session

Conversation depth (turns)

2. User Satisfaction & Feedback

Explicit Ratings (thumbs up/down, stars)

CSAT (Customer Satisfaction Score) surveys

Qualitative Feedback: “Was this helpful?” comments

3. Task Success Rate

Measures whether the LLM helped complete a user task (booking, answering, summarizing).

Example: “Was the email draft usable?” or “Did the user retry or abandon?”

4. A/B Testing

Users are split into control and test groups.

Each group interacts with different versions of the model or prompt strategy.

Metrics are compared to determine the superior variant.

5. Latency & Performance

Response Time: Time taken to generate the output.

Token Generation Speed: Tokens/sec rate (especially important in streaming outputs)

6. Retention & Churn

How often users return or continue using the LLM-powered system.

Drop-offs may indicate dissatisfaction or hallucinations.

7. Behavioral Metrics

Query Reformulation Rate: Indicates dissatisfaction.

Escape Rate to Human Agent: In customer support bots.

Key Differences Between Offline and Online Evaluation

Text Generation Metrics

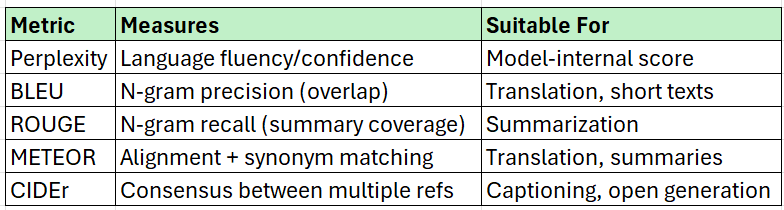

We'll use a pre-trained language model to generate text given a prompt and evaluate the output using:

✅ Perplexity (how confident the model is)

📝 BLEU (precision of n-gram overlap)

🧾 ROUGE (recall-oriented overlap)

✨ METEOR (semantic-aware match)

🧠 CIDEr (consensus metric for image captioning, adaptable for general generation)

You now have a working evaluation pipeline for LLM-generated text using multiple metrics in Python:

Use Perplexity to evaluate fluency

Use BLEU/ROUGE/METEOR/CIDEr for content quality

Sample Code

# 1. Install Required Libraries

!pip install transformers torch evaluate nltk

!pip install rouge_score

# 2. Load Model and Tokenizer (e.g., GPT2)

from transformers import GPT2LMHeadModel, GPT2Tokenizer

import torch

# Load model & tokenizer

model_name = "gpt2"

model = GPT2LMHeadModel.from_pretrained(model_name)

tokenizer = GPT2Tokenizer.from_pretrained(model_name)

model.eval()

# 3. Generate Text from a Prompt

prompt = "Once upon a time in a faraway land"

inputs = tokenizer(prompt, return_tensors="pt")

outputs = model.generate(**inputs, max_length=50, num_return_sequences=1)

generated_text = tokenizer.decode(outputs[0], skip_special_tokens=True)

print("Generated Text:\n", generated_text)

# 4. Calculate Perplexity

# Lower Perplexity = Better model predictions

# It indicates how well a model predicts the next token given previous tokens

import math

def calculate_perplexity(text):

inputs = tokenizer(text, return_tensors="pt")

with torch.no_grad():

outputs = model(**inputs, labels=inputs["input_ids"])

loss = outputs.loss

return math.exp(loss.item())

perplexity = calculate_perplexity(generated_text)

print("Perplexity:", perplexity)

# 5. Evaluate Text with BLEU, ROUGE, METEOR, CIDEr

# BLEU Score

# A metric that evaluates how many n-grams (unigrams, bigrams, trigrams, etc.) # in the generated text appear in the reference.

# Scores range from 0 (no match) to 1 (perfect match).

import nltk

from nltk.translate.bleu_score import sentence_bleu

# Download required tokenizer

nltk.download('punkt')

# Define reference and generated (candidate) texts

reference_text = "The cat is sitting on the mat."

generated = "A cat is on the mat."

# Tokenize

reference = [nltk.word_tokenize(reference_text.lower())] # list of list of tokens

candidate = nltk.word_tokenize(generated.lower()) # list of tokens

# Compute BLEU score

bleu_score = sentence_bleu(reference, candidate)

print("BLEU Score:", bleu_score)

# ROUGE Score

import evaluate

rouge = evaluate.load("rouge")

results = rouge.compute(predictions=[generated], references=[reference_text])

print("ROUGE Scores:", results)

# METEOR Score

meteor = evaluate.load("meteor")

meteor_score = meteor.compute(predictions=[generated], references=[reference_text])

print("METEOR Score:", meteor_score)

Image Generation Evaluation Metrics

Evaluating image generation models (e.g., GANs, diffusion models like Stable Diffusion, DALLE) requires specialized metrics to measure image quality, diversity, and similarity to real images.

Use FID + IS as standard baselines for most image generation tasks.

Use LPIPS and SWD for human perceptual quality and texture.

Use PPL when studying latent space interpolation quality.

Use KID as a robust FID alternative on small datasets.

Text-to-Video (T2V) Generation Evaluation

To evaluate how realistic, semantically aligned, and diverse the generated videos are compared to real videos or the input text prompts.

To evaluate Text-to-Video generation, use a multi-metric approach:

FVD + KID: Measures how realistic and distributionally accurate the generated videos are.

CLIPScore: Checks if the video matches the input text semantically.

FID + LPIPS: Evaluate visual clarity and perceptual similarity of frames.

KID is a superior alternative to FID for small sample evaluations, especially in research and rapid prototyping environments.

Conclusion

A well-rounded evaluation strategy is the foundation for building trustworthy, user-centric, and high-performance LLM applications. While offline evaluation offers control, repeatability, and benchmarking, online evaluation provides the real-world signals needed to adapt and improve LLMs over time.

To summarize:

Use Discriminative evaluation for tasks like classification, QA, and scoring.

Use Generative evaluation for open-ended text generation, summarization, or code output.

Combine metrics like Perplexity, BLEU, ROUGE, METEOR, and CodeEval for offline insights.

Embrace CLIPScore, user engagement metrics, satisfaction ratings, and A/B testing for online performance tracking.

By combining both offline precision and online adaptability, we can ensure LLMs not only perform well in benchmarks but also in the hands of end users — where it truly matters.

For more in-depth technical insights and articles, feel free to explore:

Girish Central

LinkTree: GirishHub – A single hub for all my content, resources, and online presence.

LinkedIn: Girish LinkedIn – Connect with me for professional insights, updates, and networking.

Ebasiq

Substack: ebasiq by Girish – In-depth articles on AI, Python, and technology trends.

Technical Blog: Ebasiq Blog – Dive into technical guides and coding tutorials.

GitHub Code Repository: Girish GitHub Repos – Access practical Python, AI/ML, Full Stack and coding examples.

YouTube Channel: Ebasiq YouTube Channel – Watch tutorials and tech videos to enhance your skills.

Instagram: Ebasiq Instagram – Follow for quick tips, updates, and engaging tech content.

GirishBlogBox

Substack: Girish BlogBlox – Thought-provoking articles and personal reflections.

Personal Blog: Girish - BlogBox – A mix of personal stories, experiences, and insights.

Ganitham Guru

Substack: Ganitham Guru – Explore the beauty of Vedic mathematics, Ancient Mathematics, Modern Mathematics and beyond.

Mathematics Blog: Ganitham Guru – Simplified mathematics concepts and tips for learners.